Creating a Scalable CTF Infrastructure on DigitalOcean

Posted on August 8, 2023 • 11 minutes • 2275 words

The post was originally published here.

Background Story

This post is based on my experience hosting the final round of the Inter-University Capture The Flag competition. The step-by-step guide will help you create your own CTF infrastructure on DigitalOcean. The same process can be applied to other cloud platforms such as Google Cloud, AWS, and Azure. At the end of the article, I share the pricing and usage of the infrastructure we ran during the CTF.

Let’s Get Set Up

First, let’s create a DigitalOcean account. We can use this link to get a free trial worth $200 for 60 days. To create a team, we’ll need to enter our card information for billing purposes.

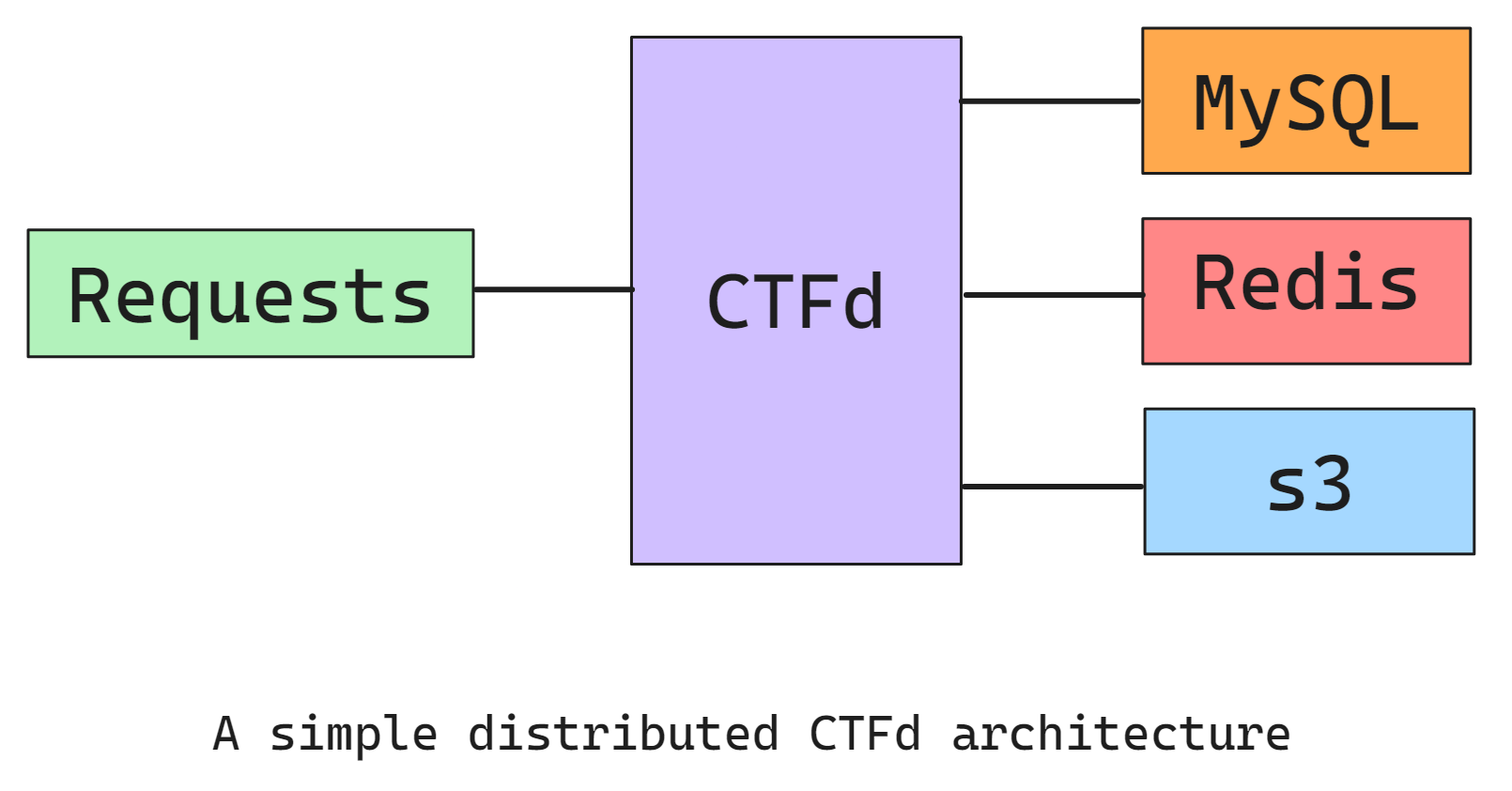

In this guide, we’ll use a distributed version of CTFd (I like to call it dCTFd), unlike the default setup where everything is hosted on the same server. Our web app, database, cache, and storage will each be hosted independently. We’ll use the following diagram as a simple reference as we create our CTF platform.

What Do We Need?

While creating distributed services, our primary goal is to keep things simple, so that anyone with beginner-level experience can follow along. We’ll use a different DigitalOcean product for each service. As a starting point, we’ll need the following:

- Droplets - for hosting the CTFd web app

- Managed Database - for MySQL and Redis Cache

- Spaces - for storing and serving static files

- Load Balancer - for balancing loads among different machines

Now, we’ll create and set up those instances step by step.

Creating Droplets

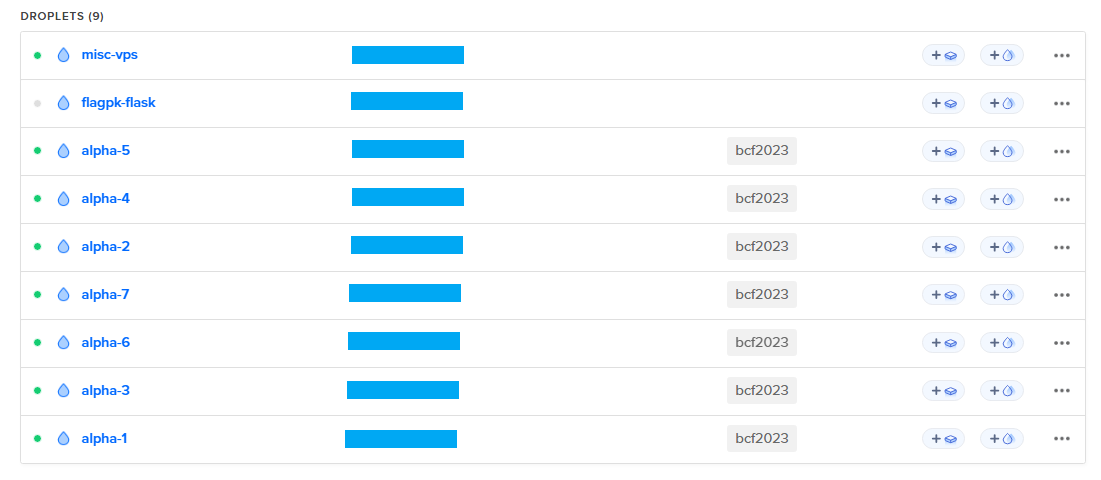

Let’s go and create some droplets for our CTFd app. We used the 4-vCPU 8GB RAM Regular CPU droplets for our purpose. For scaling and distributing the load across multiple droplets, we purchased a total of 7 droplets of the same configuration.

For our CTF challenges, we purchased one droplet having the configuration: 1-vCPU 2GB RAM, and for other miscellaneous/backup purposes, we purchased another 4-vCPU 8GB RAM droplet.

One might ask why we are using virtual machines (Droplets) instead of app engines or similar services. During the set-up phase for the competition, I tried multiple times to run the CTFd app using Flask/Gunicorn and Docker, and failed. As a result, I found hosting CTFd through the provided docker-compose file to be the easiest and most convenient solution.

Managed Database(s)

DigitalOcean MySQL

We need a MySQL instance for storing CTFd data (challenges, login, users, teams, etc.). We created a Basic 2GB RAM - 1vCPU - 30GB disk MySQL cluster using the DigitalOcean Managed Database service. One node/instance of the MySQL database allows 75 simultaneous connections.

We chose DigitalOcean’s Managed Database service over hosting our own instance because managed databases are easy to scale up and down. At run-time, we can easily add more nodes to the database to handle the load.

After creating the database, we are given the credentials, which include the database server’s public and private (VPC) hostnames. For our purpose, we are going to use the public hostname (the first one) and the credentials given.

SSL Certificate

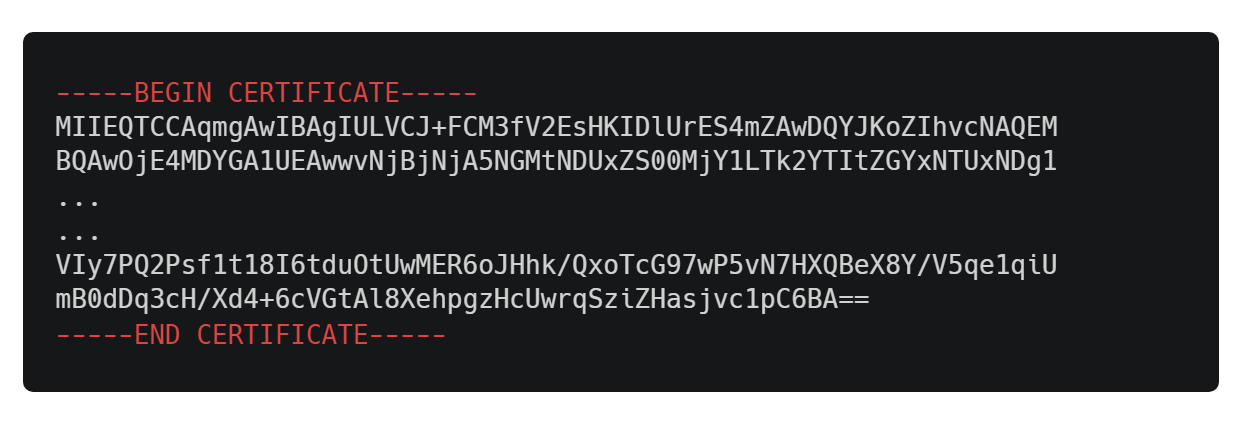

During database creation, we are given a file called ca-certificate.crt, which is required because the MySQL server only accepts connections over SSL. Download the file and keep it secure; we are going to use it later. The file looks somewhat like this:

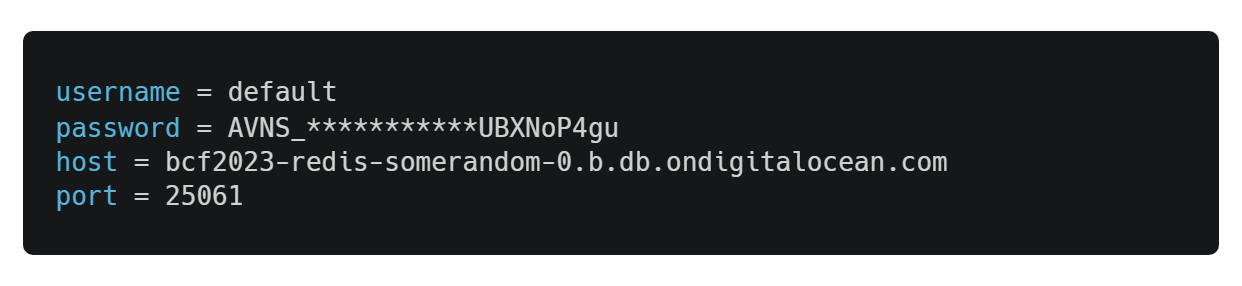

DigitalOcean Redis

CTFd uses Redis to cache the data so that it can serve the requests faster. Having a cache helps take the load off the database. We are going to create a Redis instance using the DigitalOcean Managed Database service.

For our purpose, we created a Basic 1GB RAM - 1vCPU - 10GB disk Redis database instance. Unlike MySQL, we did not need to scale the Redis instance. Had we needed to, the process would have been just as easy as with MySQL.

DigitalOcean Spaces (S3 Storage)

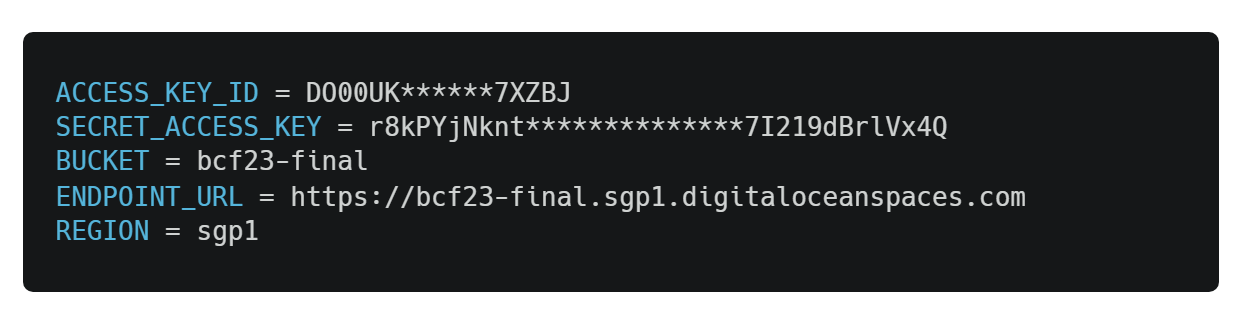

We created a storage bucket having a configuration of 250GB disk - 1TB bandwidth through DigitalOcean Spaces service. It cost us $5/month, which is kind of great. After creating the space, we are given the following information-

Except for ACCESS_KEY_ID and SECRET_ACCESS_KEY, the rest are given when we create the service. To create an access key, we need to go to the Control Panel and click API. From there, we can generate a new key. Both ACCESS_KEY_ID and SECRET_ACCESS_KEY will be generated automatically, and then we will store them safely.

Load Balancer

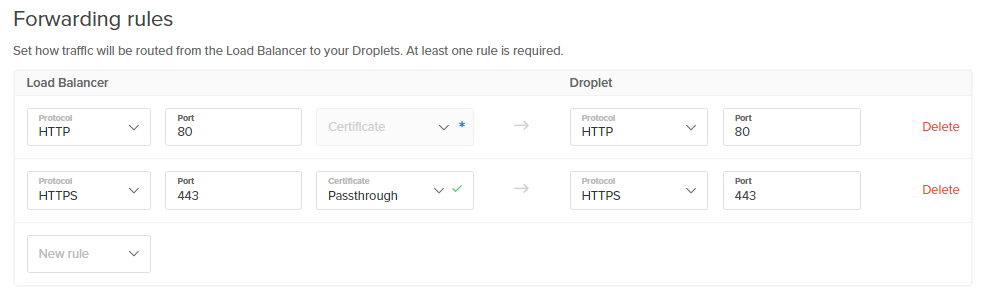

The DigitalOcean Load Balancer is an easy alternative to setting up our own Nginx server using another server. For our purpose, we used a 2-node 20,000 simultaneous HTTP connection configuration load balancer. During the creation, we can easily add our Droplets using their tag or name. For forwarding the connections, we set up our load balancer only for two protocols- HTTP and HTTPS as below-

After that, we gave our Load Balancer a name and created the service. Another reason to use DigitalOcean’s load balancer is that it can be scaled up or down by adding or removing nodes at run-time. While running, the load balancer communicates with each of our Droplets over their private IPs to get real-time health status.

Configuring CTFd App

We used CTFd, the most famous and widely used CTF hosting platform, for our competition. To avoid unexpected issues, we used the latest stable release at the time of writing, 3.5.3. Since CTFd is most often deployed with Docker, some groundwork needs to be done. First, let’s download the CTFd source code from GitHub, and then follow the instructions step by step:

1. docker-compose.yml

i) Gunicorn Workers

In the docker-compose.yml file, there’s a field called WORKERS under services > environment. It denotes the total number of workers that CTFd’s Gunicorn servers are going to use. According to the documentation, the recommended number of workers follows the formula: WORKERS = 2 * NUMBER_OF_CORES + 1

Since we’re using 4-vCPU Droplets, let’s put WORKERS=9.
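The arithmetic is easy to script when Droplet sizes differ. The following is a minimal sketch; the helper name `recommended_workers` is made up for illustration and is not part of CTFd or Gunicorn.

```python
import os


def recommended_workers(cores: int) -> int:
    """Apply the rule of thumb quoted above: 2 * cores + 1."""
    if cores < 1:
        raise ValueError("cores must be at least 1")
    return 2 * cores + 1


if __name__ == "__main__":
    # os.cpu_count() may return None, so fall back to a single core.
    cores = os.cpu_count() or 1
    print(f"WORKERS={recommended_workers(cores)}")
```

For the 4-vCPU Droplets used here, `recommended_workers(4)` returns 9, matching the value above.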

ii) Other Environment Variables

Now, let’s put the secret keys, database URL, usernames, etc. under the environment option, using the key-value pairs below:

SECRET_KEY

Create a random secret key and hard-code the same value on every CTFd instance. CTFd uses it for multiple purposes, such as signing session cookies, so all the Droplets behind the load balancer must share it.

DATABASE_URL

Instead of putting the database user, password, and URL individually, let’s create a single connection string in the following format:

DATABASE_URL=mysql+pymysql://<username>:<password>@<host>:<port>/<database-name>
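Generated passwords often contain characters such as `@` or `/` that break a URL if pasted in raw. Below is a minimal sketch of assembling the string with percent-encoding from the standard library. The function name and the sample values (`ctfd`, `db.example.com`, port `3306`) are placeholders, not values from our deployment.

```python
from urllib.parse import quote_plus


def build_database_url(user: str, password: str, host: str, port: int, database: str) -> str:
    """Assemble a mysql+pymysql URL, percent-encoding the credentials."""
    return (
        f"mysql+pymysql://{quote_plus(user)}:{quote_plus(password)}"
        f"@{host}:{port}/{database}"
    )


if __name__ == "__main__":
    # Placeholder values for illustration only.
    print("DATABASE_URL=" + build_database_url("ctfd", "p@ss/word", "db.example.com", 3306, "ctfd"))
```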

REDIS_URL

For our Redis instance, let’s put the connection string just like we did with our database.

REDIS_URL=rediss://<username>:<password>@<host>:<port>

Now, you might wonder why it is rediss and not redis. Like the extra ‘s’ in HTTPS, the extra ‘s’ in rediss indicates that the connection is secured with SSL/TLS.
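A quick sanity check on the scheme can catch a mistyped URL before the container starts. This is a small sketch using only the standard library; `redis_uses_tls` is a hypothetical helper, and the sample URL is a placeholder.

```python
from urllib.parse import urlsplit


def redis_uses_tls(url: str) -> bool:
    """Return True for rediss:// URLs, False for plain redis:// URLs."""
    scheme = urlsplit(url).scheme
    if scheme not in ("redis", "rediss"):
        raise ValueError(f"not a Redis URL: {scheme!r}")
    return scheme == "rediss"


if __name__ == "__main__":
    # Placeholder URL for illustration only.
    print(redis_uses_tls("rediss://default:secret@cache.example.com:6380"))
```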

UPLOAD_PROVIDER

This field selects where CTFd stores uploaded files. Since we’re using DigitalOcean Spaces, we need to put s3 in this field, because Spaces exposes an S3-compatible API.

UPLOAD_FOLDER

This is the directory where CTFd uploads files if it uses the file system as the upload provider. Even though we are using s3 as our upload provider, we still need to provide a directory with write permission here. Why? Remember when you create a backup from the admin panel and CTFd returns a big ZIP file? In that case, CTFd doesn’t upload the ZIP to the upload provider, but rather stores it locally in UPLOAD_FOLDER and serves you the file from there. So, let’s put /var/uploads in the field.

UPLOAD_FOLDER=/var/uploads

AWS_ACCESS_KEY_ID

Let’s put the ACCESS_KEY_ID that we got from our S3 service here.

AWS_ACCESS_KEY_ID=DO00UK********7XZBJ

AWS_SECRET_ACCESS_KEY

Let’s put the SECRET_ACCESS_KEY that we got from our S3 service here.

AWS_SECRET_ACCESS_KEY=r8kPYjNknt**********7I219dBrlVx4Q

AWS_S3_BUCKET

Let’s just put our bucket’s name here-

AWS_S3_BUCKET=bcf23-final

AWS_S3_ENDPOINT_URL

This is where the trick lies. If you put the whole endpoint URL of your Space here, it won’t work. CTFd (or the boto3 client inside) expects the regional endpoint URL, that is, the URL of your region’s data center. Since we chose Singapore as our data-center region, let’s put the following:

AWS_S3_ENDPOINT_URL=https://sgp1.digitaloceanspaces.com

AWS_S3_REGION

Let’s simply put the region’s data center’s name in this part.

AWS_S3_REGION=sgp1
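The two values can be derived mechanically. The sketch below assumes the "whole" URL is the bucket-specific one of the form `https://<bucket>.<region>.digitaloceanspaces.com`; both function names are made up for illustration.

```python
from urllib.parse import urlsplit


def regional_endpoint(full_url: str, bucket: str) -> str:
    """Strip a leading '<bucket>.' from the host, leaving the regional endpoint."""
    parts = urlsplit(full_url)
    host = parts.hostname or ""
    prefix = bucket + "."
    if host.startswith(prefix):
        host = host[len(prefix):]
    return f"{parts.scheme}://{host}"


def region_from_endpoint(endpoint: str) -> str:
    """Take the first host label of the regional endpoint as the region name."""
    host = urlsplit(endpoint).hostname or ""
    return host.split(".")[0]


if __name__ == "__main__":
    endpoint = regional_endpoint("https://bcf23-final.sgp1.digitaloceanspaces.com", "bcf23-final")
    print("AWS_S3_ENDPOINT_URL=" + endpoint)
    print("AWS_S3_REGION=" + region_from_endpoint(endpoint))
```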

iii) Delete Unnecessary Things

Now that we have set up our database, cache, and storage, let’s delete the things we no longer need from our docker-compose.yml file. Delete or comment out the db and cache services. Also, since we removed db, remove the depends_on key and its value from the ctfd service, because it no longer needs the db service.
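With the bundled db and cache services gone, the ctfd service depends entirely on the environment variables set above, so a missing key is worth catching early. Here is a minimal sketch of such a check; the script and the `missing_settings` helper are hypothetical and not part of CTFd.

```python
import os

# The keys discussed in this section.
REQUIRED_KEYS = (
    "WORKERS",
    "SECRET_KEY",
    "DATABASE_URL",
    "REDIS_URL",
    "UPLOAD_PROVIDER",
    "UPLOAD_FOLDER",
    "AWS_ACCESS_KEY_ID",
    "AWS_SECRET_ACCESS_KEY",
    "AWS_S3_BUCKET",
    "AWS_S3_ENDPOINT_URL",
    "AWS_S3_REGION",
)


def missing_settings(env) -> list:
    """Return the required keys that are absent or empty in the given mapping."""
    return [key for key in REQUIRED_KEYS if not env.get(key)]


if __name__ == "__main__":
    missing = missing_settings(os.environ)
    if missing:
        print("Missing settings:", ", ".join(missing))
    else:
        print("All settings present")
```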

After following the steps above, we will have something as below:

2. MySQL Certificate

Remember we were given a ca-certificate.crt file when we created our MySQL database instance? We are going to configure our CTFd app to use the certificate for a secure database connection.

i) docker-entrypoint.sh

Let’s copy our certificate into the conf/ directory and add the following lines to our docker-entrypoint.sh script, so that the certificate is copied into place when the container starts.

    # Copy Certificate for MySQL
    cp /opt/CTFd/conf/ca-certificate.crt /etc/ssl/certs/

This copies our certificate to the container’s /etc/ssl/certs/ directory, the recommended location for storing certificates in a Linux environment, especially Ubuntu.

ii) ping.py

In the base directory, find the Python file called ping.py. In the file, the initial database connection is made. Since our database uses a secure connection, we need to configure the connection to use the ca-certificate.crt file. Let’s add the following code segment to that file before the create_engine function is called.

    connect_args = {
        "ssl": {
            "ssl_ca": "/etc/ssl/certs/ca-certificate.crt",
        }
    }

    # Wait for the database server to be available
    engine = create_engine(url, connect_args=connect_args)
    print(f"Waiting for {url.host} to be ready")

Let’s go the extra mile and add the following debug messages to see whether our connection works or not in the same file.

    while True:
        try:
            x = engine.raw_connection()
            print("Connection:", x)
            break
        except Exception as e:
            print("Exception:", e)
            time.sleep(1)
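The loop above retries forever, which can hide a wrong hostname or password behind an endless stream of exceptions. One possible variation is a bounded retry that gives up after a fixed number of attempts. This is only a sketch: `wait_until_ready` and its parameters are made up for illustration and are not part of CTFd’s ping.py.

```python
import time


def wait_until_ready(connect, attempts=30, delay=1.0, sleep=time.sleep):
    """Call connect() until it succeeds, or raise after `attempts` failures."""
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            return connect()
        except Exception as exc:
            last_error = exc
            print(f"Attempt {attempt}/{attempts} failed: {exc}")
            if attempt < attempts:
                sleep(delay)
    raise TimeoutError(f"not ready after {attempts} attempts") from last_error
```

In ping.py, this could be called as `wait_until_ready(engine.raw_connection)`, assuming the `engine` object created earlier in the same file.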

If you have followed through, the ping.py file will look something like below:

3. Additional Changes

i) Dockerfile

Chances are our docker-entrypoint.sh file might not be executable when copied into the container image. This happened to me, so I had to add the following line to the Dockerfile:

RUN chmod +x "/opt/CTFd/docker-entrypoint.sh"

ii) requirements.txt

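The pymysql library used by CTFd may or may not work with the external MySQL database. If it doesn’t, mysql-connector-python can help us out. So, let’s add the mysql-connector-python library to our requirements.txt file. Note that SQLAlchemy only uses it when the DATABASE_URL scheme is changed from mysql+pymysql:// to mysql+mysqlconnector://.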

Setting Up Cloudflare (Recommended)

This step is recommended if a large number of people participate in the CTF publicly. Routing traffic through Cloudflare not only helps mitigate incoming DDoS attacks but also hides your servers’ public IP addresses. Believe it or not, a publicly exposed IP address for your CTF can bring disaster: attackers can DDoS your servers directly through that IP, since the traffic is not being routed through Cloudflare. So, let’s go and configure our Cloudflare account.

1. Edge Certificate

If you don’t have an origin certificate, don’t worry. Just go to Cloudflare’s SSL/TLS > Overview menu and turn on “Flexible” mode, which encrypts traffic between visitors and Cloudflare while Cloudflare connects to the origin over plain HTTP. Also, make sure that you have created an “Edge Certificate”. That should be enough for our site’s security.

More information here: https://developers.cloudflare.com/ssl/

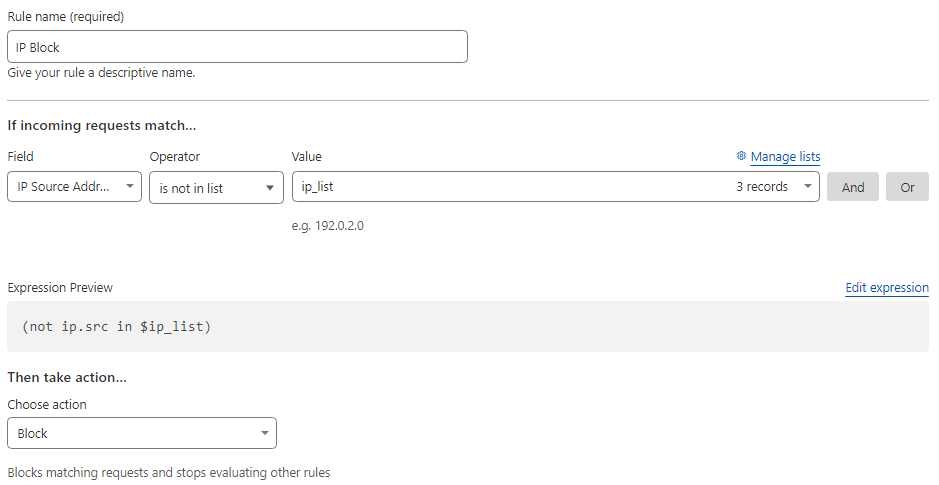

2. Web Application Firewall (WAF)

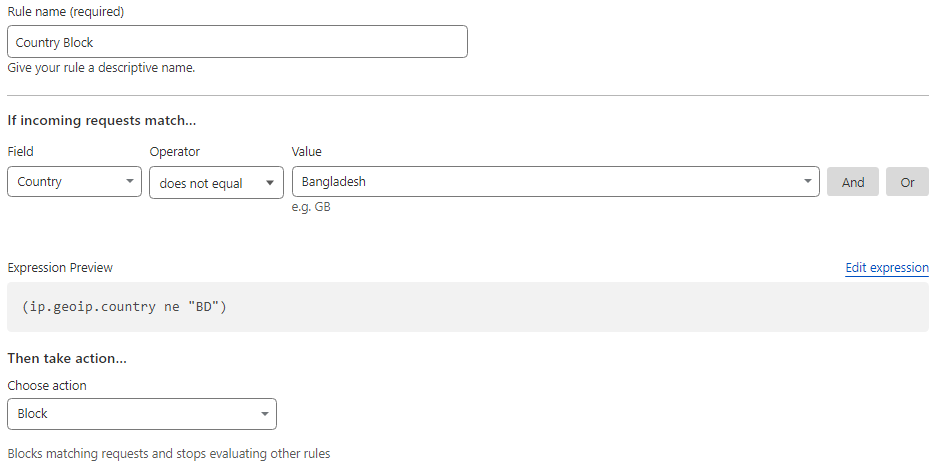

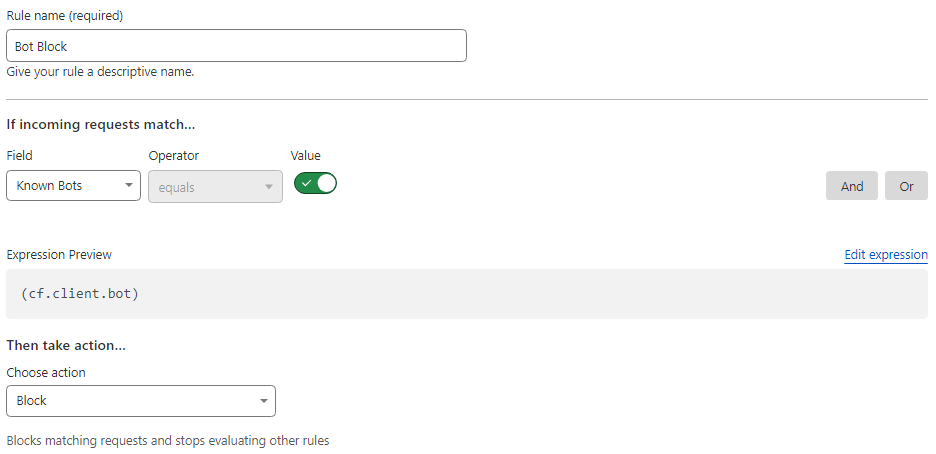

Now, let’s go to the Security > WAF menu and create firewall rules for our site. For our use case, we’re going to create three rules-

i) Country Block: Since our competition was to take place in Bangladesh, we created a firewall rule to only allow Bangladeshi IP addresses.

ii) Bot Block: To block botnets and fuzzing tools, let’s create a rule for blocking known bots.

iii) IP Block: This rule was implemented as a last resort to stop incoming attacks. In this rule, we passed a list of IP addresses and blocked requests that came from outside these IP addresses.
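The logic behind the IP Block rule is a plain allowlist check. The sketch below illustrates that logic with Python’s standard `ipaddress` module; it is not how Cloudflare implements the rule, and the addresses are reserved documentation ranges rather than the ones we used.

```python
from ipaddress import ip_address, ip_network


def is_allowed(client_ip: str, allowed_networks) -> bool:
    """Return True if client_ip falls inside any of the allowed networks."""
    address = ip_address(client_ip)
    return any(address in ip_network(network) for network in allowed_networks)


if __name__ == "__main__":
    # Documentation-only ranges, used here as placeholders.
    allowlist = ["192.0.2.0/24", "198.51.100.7/32"]
    print(is_allowed("192.0.2.10", allowlist))
    print(is_allowed("203.0.113.5", allowlist))
```

A request whose source address fails this check would be the one the rule blocks.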

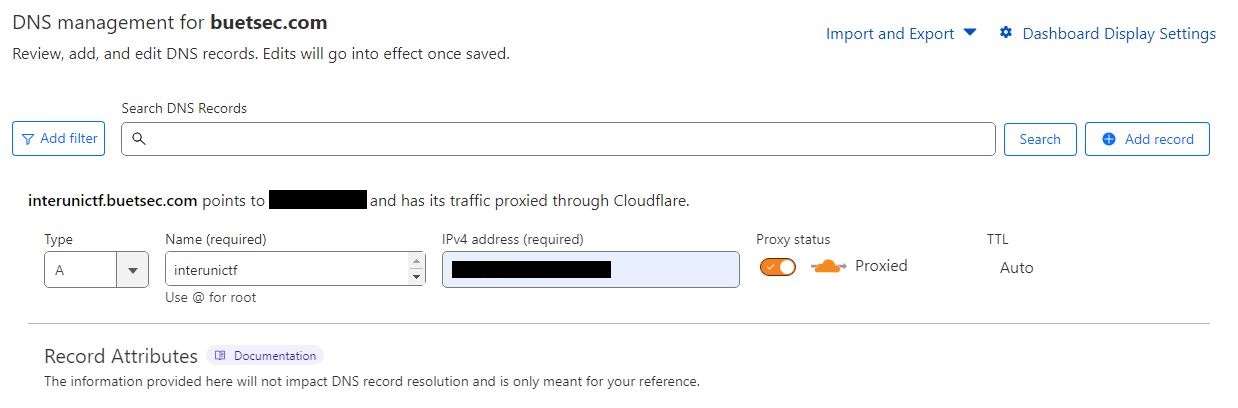

3. DNS Records

On the DNS page, let’s create an “A Record” for our sub-domain and enter our DigitalOcean Load Balancer’s IP address. Now, the traffic from Cloudflare’s DNS will go into our load balancer- and the load balancer will balance it evenly among the Droplets.

Finally, we set our site’s security level to “I’m Under Attack!” and the captcha challenge length to 30 minutes.

Wrapping Up

With all the services created and connected, our distributed CTFd platform is good to go. During our competition, we used a total of 9 Droplets, a 2-node MySQL database, a 1-node Redis cache, 250GB S3 storage, and a 2-node Load Balancer, along with the said Cloudflare protection. However, we didn’t enable the IP Block since we didn’t receive any malicious requests. We used the below architecture for our competition’s CTF platform-

During the development and competition phase, we used up a total of 885 hours of resources from our implemented architecture. The total cost added up to only $45.50- which is kind of amazing! The total cost breakdown of our used services during the competition is shown below-

DigitalOcean’s pay-as-you-go method and efficient costing kept it within our budget. The next time I’m hosting a CTF or deploying cloud services- I want DigitalOcean in my corner. To be honest, I found DigitalOcean’s pricing to be more flexible than most of the Bangladeshi hosting services that we checked out during the development phase.

Technologies are bound to change, and services can be implemented in different ways. I hope you will be able to implement the platform on other cloud services such as AWS, Azure, and Google Cloud. I hope this article has been a joy to read. If you’re new to hosting CTFs and my article helped you out, please like and share.

Follow my latest blogs here.

References

- Cover image from DigitalOcean Imgur

- Cypher Image from Dots Esports

- Diagrams were drawn using Excalidraw